Federated learning in the EOSC

Basics of federated learning. Tips and tricks. - Khadijeh Alibabaei (KIT)

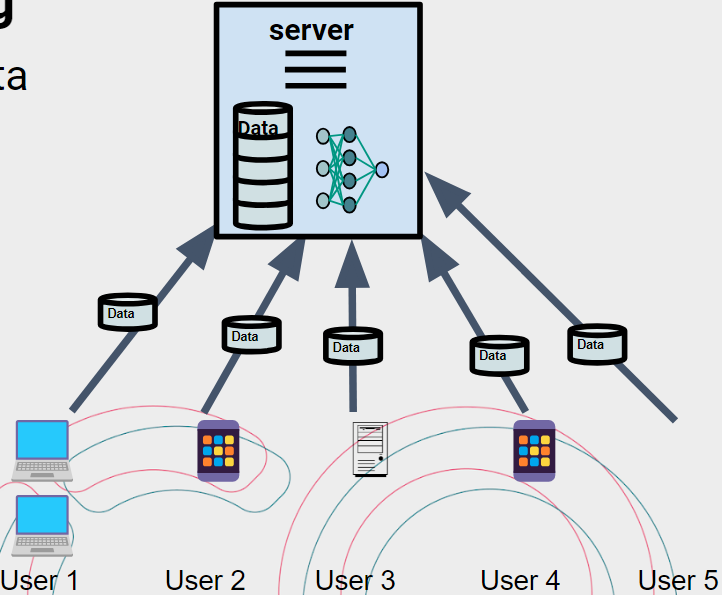

Centralized Learning in Machine Learning

About

- Refers to the traditional approach where all data is gathered and stored in a central location to train a machine learning model.

- Involves collecting and combining data from multiple sources into a single dataset before training the model.

Challenges

- Data Flow Management

Managing the transfer of large volumes of diverse data quickly and accurately across different organizations.

- Scalability.

- Communication Overhead.

- Intense competition within the industry.

- Data Privacy

Ensuring compliance with strict data protection regulations, such as the GDPR and the EU AI Act.

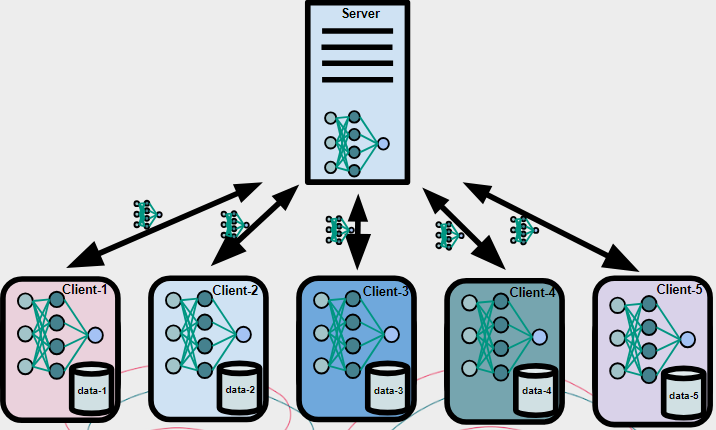

Federated Learning in Machine Learning

A method that enables multiple peers to collaboratively learn a common prediction model by exchanging model weights, while the sensitive data stays on the local devices.

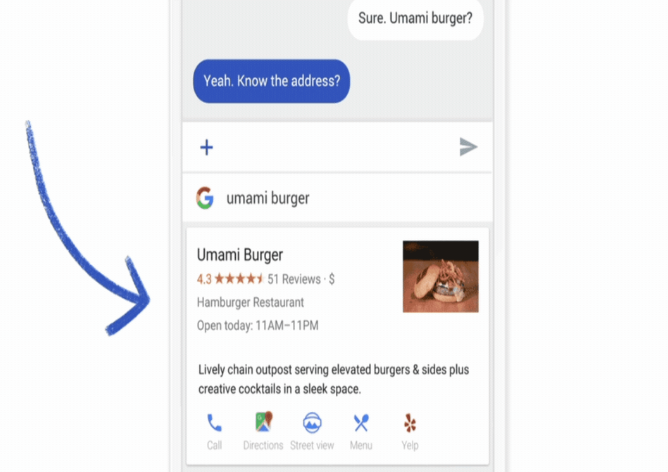

- Google has used FL in Gboard on Android. When Gboard suggests a query, the phone stores the context and the user's interactions locally, and Federated Learning uses this on-device data to improve Gboard’s suggestions.

- Apple has employed federated learning to improve Siri’s voice recognition capabilities while maintaining user privacy.

- Predicting oxygen requirements of COVID-19 patients in the ER from chest X-rays and health records (Muto, R., et al. (2022)).

Federated learning - categories

Federated Learning can be categorized as (Khan et al. (2023)):

- Data distribution:

- Cross-device

The model is decentralized across edge devices and is trained using the local data on each device.

- Cross-silo

The clients are typically a smaller number of organizations, institutions, or other data silos.

- Architecture:

- Centralized Federated Learning

A server coordinates the training.

- Decentralized Federated Learning

The communication is peer to peer.

- Learning model:

- Horizontal Federated Learning

Each party has the same feature space but different data samples.

- Vertical Federated Learning

The parties' datasets share the same samples/users but hold different features (Liu, Y., et al. (2023)).
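To make the horizontal/vertical distinction concrete, the sketch below splits one small table in both ways. The records, feature names and party names are all hypothetical and chosen only for illustration; rows are samples (users) and columns are features.

```python
# Toy table: each row is one user (sample), each column one feature.
# The data and the feature names are hypothetical.
FEATURES = ["age", "income", "diagnosis"]
TABLE = {
    "user_1": [34, 52000, 0],
    "user_2": [58, 61000, 1],
    "user_3": [41, 47000, 0],
    "user_4": [29, 39000, 1],
}

def horizontal_split(table, parties):
    """Horizontal FL: every party keeps ALL features for a DIFFERENT subset of users."""
    shards = {p: {} for p in parties}
    for i, user in enumerate(sorted(table)):
        # Round-robin assignment of users (rows) to parties.
        shards[parties[i % len(parties)]][user] = list(table[user])
    return shards

def vertical_split(table, feature_groups):
    """Vertical FL: every party keeps the SAME users but a DIFFERENT subset of features."""
    shards = {}
    for party, cols in feature_groups.items():
        idx = [FEATURES.index(c) for c in cols]
        shards[party] = {user: [row[i] for i in idx] for user, row in table.items()}
    return shards

h = horizontal_split(TABLE, ["hospital_A", "hospital_B"])
v = vertical_split(TABLE, {"bank": ["age", "income"], "clinic": ["diagnosis"]})

print(sorted(h["hospital_A"]))  # ['user_1', 'user_3']
print(v["clinic"]["user_2"])    # [1]: the clinic only holds the 'diagnosis' column
```

In the horizontal case the parties could train the same model architecture on their own rows; in the vertical case no party has a complete feature vector for any user, which is why vertical FL additionally needs the parties to align their shared users.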

Workflow in FL: Communication Strategies

- Scatter and gather

Global model parameters are distributed to client devices for local training; the updated parameters are then aggregated.

- Cyclic Learning (Chang, K., et al. (2018))

The server selects a subset of clients, and training proceeds through them in a predetermined sequential order set by the server.

- Swarm Learning (Warnat-Herresthal, S., et al. (2021))

A decentralized variant of FL in which orchestration and aggregation are performed by the clients.
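The difference between scatter and gather and cyclic learning can be seen in a toy simulation. This is a minimal sketch under loud assumptions: the "model" is a single scalar `w`, each client fits it to its own (hypothetical) data by a few gradient steps on a squared error, and all function names are illustrative, not taken from any FL framework.

```python
# Toy setting (hypothetical): the "model" is one scalar w, and each client
# fits w to its own local data by minimising the mean squared error.
CLIENT_DATA = {
    "client_1": [1.0, 2.0, 3.0],
    "client_2": [10.0, 12.0],
    "client_3": [5.0],
}

def local_train(w, data, lr=0.1, steps=5):
    """A few steps of gradient descent on mean((w - x)^2), using only local data."""
    for _ in range(steps):
        grad = sum(2 * (w - x) for x in data) / len(data)
        w -= lr * grad
    return w

def scatter_and_gather(w_global, rounds=10):
    total = sum(len(d) for d in CLIENT_DATA.values())
    for _ in range(rounds):
        # Scatter: every client starts from the same global parameters.
        updates = {c: local_train(w_global, d) for c, d in CLIENT_DATA.items()}
        # Gather: the server aggregates, weighting each client by its sample count.
        w_global = sum(updates[c] * len(d) / total for c, d in CLIENT_DATA.items())
    return w_global

def cyclic(w_global, order, rounds=10):
    for _ in range(rounds):
        # The model visits the selected clients one after another in a fixed order;
        # each client continues training from the previous client's weights.
        for c in order:
            w_global = local_train(w_global, CLIENT_DATA[c])
    return w_global

sg = scatter_and_gather(0.0)
cy = cyclic(0.0, ["client_1", "client_2", "client_3"])
print(sg, cy)
```

In this toy run, scatter and gather settles at the sample-weighted mean of all client data (5.5), whereas the cyclic variant settles at a different point, because each client continues from its predecessor's weights and clients are not weighted by their sample counts.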

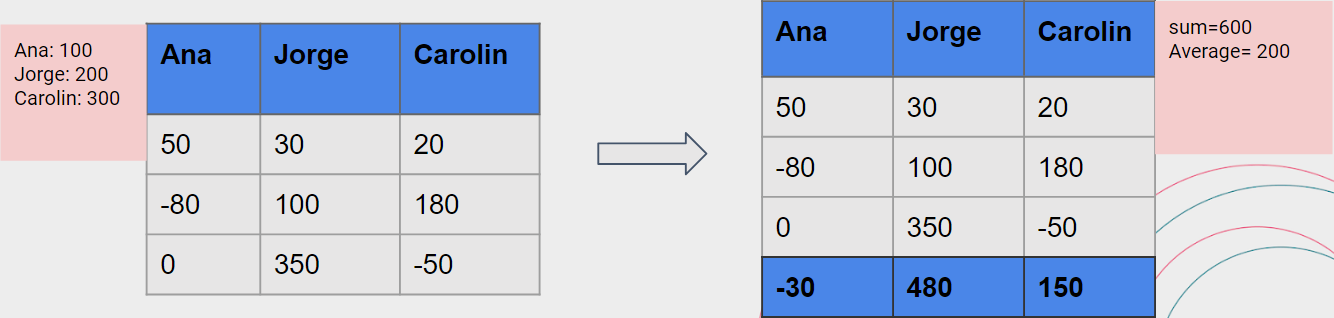

Model Aggregation

Model Aggregation in FL is a further development of distributed learning that is specifically tailored to the challenges of unbalanced and non-independent and identically distributed (non-IID) data.

- FedAvg

After local training, the local weights are collected and aggregated using a weighted average (typically weighted by the number of local samples).

- FedProx

Adds a proximal term to the local loss function that penalizes local client weights for deviating from the global model.

- FedOpt

Adds the option of using a specified optimizer and learning-rate scheduler when updating the global model (e.g., SGD to aggregate the model weights).

- Scaffold

Adds a correction term to the network parameters during local training, computed from the discrepancy between the global and local update directions (client drift).

- Ditto

A federated learning method that improves fairness and robustness by personalizing the learning objective for each device.
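The two most common rules above can be written in a few lines. This is a minimal sketch, not any framework's implementation: model weights are flat lists of floats, the helper names are hypothetical, and `mu` is the FedProx proximal coefficient, so the local FedProx objective is the task loss plus (mu / 2) * ||w_local - w_global||^2.

```python
# Model weights are represented as flat lists of floats (hypothetical toy setup).

def fedavg(client_weights, client_sizes):
    """FedAvg aggregation: average of client weights, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]

def fedprox_loss(local_loss, w_local, w_global, mu):
    """FedProx local objective: task loss + (mu / 2) * ||w_local - w_global||^2."""
    prox = sum((a - b) ** 2 for a, b in zip(w_local, w_global))
    return local_loss + 0.5 * mu * prox

def fedprox_grad(task_grad, w_local, w_global, mu):
    """Gradient of the FedProx objective: task gradient + mu * (w_local - w_global)."""
    return [g + mu * (a - b) for g, a, b in zip(task_grad, w_local, w_global)]

# Two clients with 100 and 300 samples: the larger client dominates the average.
global_w = fedavg([[1.0, 2.0], [3.0, 6.0]], client_sizes=[100, 300])
print(global_w)  # [2.5, 5.0]
```

With `mu = 0`, the FedProx gradient reduces to the plain task gradient; larger values of `mu` pull each client's local weights more strongly back towards the current global model.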

Reconstruction attack (Truong et al. (2021))

- The original training data samples can be reconstructed from the model weights.

- Membership tracing, i.e., checking whether a given data point belongs to a training dataset, or detecting when a participant whose local data has a certain property joined the collaborative training.
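Why reconstruction is possible can be shown on the simplest case: a single fully connected layer with a bias, trained on one sample. Its gradients satisfy dL/dW[i][j] = delta_i * x_j and dL/db[i] = delta_i, so dividing a row of the weight gradient by the matching bias gradient returns the private input exactly. This is a minimal sketch under those assumptions (one layer, one sample, squared-error loss, hypothetical names); after a single local SGD step the same gradients can be recovered from the updated weights as (w_old - w_new) / lr if the learning rate is known. Real attacks on deep networks are considerably more involved.

```python
import random

def forward_and_grads(W, b, x, target):
    """Single-sample linear layer y = W x + b with loss 0.5 * ||y - target||^2.
    Returns the gradients a client would share: dL/dW and dL/db."""
    y = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    delta = [yi - ti for yi, ti in zip(y, target)]  # dL/dy
    grad_W = [[d * xj for xj in x] for d in delta]  # dL/dW[i][j] = delta_i * x_j
    grad_b = list(delta)                            # dL/db[i]    = delta_i
    return grad_W, grad_b

def reconstruct_input(grad_W, grad_b, eps=1e-12):
    """Attacker side: recover x from the shared gradients alone, using
    x_j = dL/dW[i][j] / dL/db[i] for any row i with a non-zero bias gradient."""
    for row, gb in zip(grad_W, grad_b):
        if abs(gb) > eps:
            return [g / gb for g in row]
    return None  # all bias gradients are zero: this simple attack fails

random.seed(0)
x_private = [0.2, -1.5, 3.0]  # the client's private input (hypothetical)
W = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(2)]
b = [0.0, 0.0]
gW, gb = forward_and_grads(W, b, x_private, target=[1.0, 0.0])
print(reconstruct_input(gW, gb))  # approximately [0.2, -1.5, 3.0]
```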

Solutions

- Data Anonymization

A technique to hide or remove sensitive attributes, such as personally identifiable information (PII) (Narayanan, A. & Shmatikov, V. (2008)).

- Differential Privacy (DP)

Provides a formal definition of privacy by introducing calibrated noise into query responses to prevent the disclosure of sensitive information. Differential privacy mechanisms include Laplace noise addition, the exponential mechanism and others.

- Secure Multi-party Computation (SMPC) (Zapechnikov (2022))

A cryptographic technique that enables multiple parties to jointly compute a function over their private inputs while keeping those inputs confidential.

- Homomorphic Encryption (HE) (Behera et al. (2020))

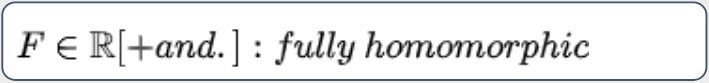

Allows computations to be performed on encrypted data.

- Fully Homomorphic Encryption (FHE)

Allows any number of operations (both additions and multiplications) to be performed on encrypted data.

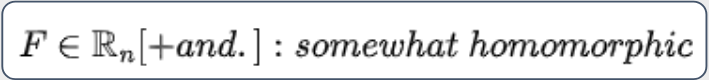

- Somewhat Homomorphic Encryption (SWHE)

Limits the number of operations that can be performed on encrypted data.

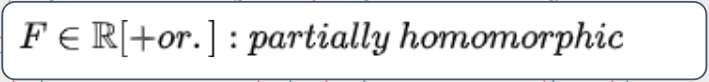

- Partially Homomorphic Encryption (PHE)

Allows only one type of operation to be performed.
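The Laplace mechanism mentioned under Differential Privacy adds noise with scale sensitivity / epsilon to a numeric query answer. The sketch below applies it to a hypothetical counting query on one client's records; Laplace samples are drawn as the difference of two i.i.d. exponentials, which has exactly a Laplace distribution. In FL, DP is more often applied to the model updates themselves, which additionally requires clipping each update to bound its sensitivity; that step is not shown here.

```python
import random
import statistics

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def laplace_mechanism(true_answer, sensitivity, epsilon, rng):
    """epsilon-DP release of a numeric query: add Laplace noise with scale sensitivity / epsilon."""
    return true_answer + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical query: "how many patients in this client's dataset have diagnosis = 1?"
# Adding or removing one patient changes a count by at most 1, so sensitivity = 1.
records = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1]
true_count = sum(records)

rng = random.Random(42)
noisy = laplace_mechanism(true_count, sensitivity=1.0, epsilon=0.5, rng=rng)
print(true_count, round(noisy, 2))

# Smaller epsilon -> stronger privacy guarantee -> noisier answers.
spread = {
    eps: statistics.pstdev([laplace_mechanism(true_count, 1.0, eps, rng) for _ in range(20000)])
    for eps in (0.1, 1.0)
}
print(spread)
```

The standard deviation of Laplace(0, b) noise is sqrt(2) * b, so the empirical spread for epsilon = 1.0 should be close to 1.41 and about ten times larger for epsilon = 0.1.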
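A common SMPC building block for secure aggregation in FL is additive secret sharing: each client splits its update into random shares that sum to the true value modulo a public number, so no single share reveals anything, yet the sum over all clients can still be recovered. The sketch below is a toy illustration under stated assumptions (honest-but-curious parties, no client dropouts, no authentication, fixed-point encoding with a hypothetical scale factor); it is not the scheme from Zapechnikov (2022) or a production protocol.

```python
import random

Q = 2**61 - 1   # public modulus (a Mersenne prime); all arithmetic is mod Q
SCALE = 10**6   # fixed-point scaling to turn float updates into integers

def encode(x):
    return round(x * SCALE) % Q

def decode(v):
    # Values above Q // 2 represent negative numbers.
    if v > Q // 2:
        v -= Q
    return v / SCALE

def share(value, n_parties, rng):
    """Split an encoded value into n random additive shares that sum to it mod Q."""
    shares = [rng.randrange(Q) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % Q)
    return shares

def secure_sum(private_values, rng):
    n = len(private_values)
    # Each client sends its j-th share to party j; a single share looks uniformly random.
    received = [[] for _ in range(n)]
    for v in private_values:
        for j, s in enumerate(share(encode(v), n, rng)):
            received[j].append(s)
    # Each party publishes only the sum of the shares it received.
    partial_sums = [sum(r) % Q for r in received]
    # Combining the partial sums reveals the total, but not the individual inputs.
    return decode(sum(partial_sums) % Q)

rng = random.Random(7)
updates = [0.125, -0.5, 0.75]    # one (scalar) model-update coordinate per client
print(secure_sum(updates, rng))  # 0.375
```

Dividing the securely computed sum by the number of clients (or using sample-weighted values) gives the aggregate needed for FedAvg without the server seeing any individual update.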

Poisoning attacks on Federated Learning (Truong et al. (2021))

During model training in FL, participants can manipulate the training process by introducing arbitrary updates, potentially poisoning the global model.

Solution

- Model Anomaly Detection (Fung, C., Yoon, C.J., Beschastnikh, I. (2018); Jagielski, M., et al. (2018)).

- Not directly applicable when secure model aggregation is used, since the server can then no longer inspect individual client updates.

This problem needs more research.
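To give a feel for model anomaly detection, the sketch below flags client updates that lie far from the coordinate-wise median of all updates and averages only the rest. It is a deliberately simple, hypothetical rule (the threshold and helper names are assumptions), not the method of Fung et al. or Jagielski et al. Note that it needs to see every individual update, which is exactly what secure aggregation prevents.

```python
import statistics

def coordinate_median(updates):
    """Coordinate-wise median of the client updates (a robust reference point)."""
    return [statistics.median(col) for col in zip(*updates)]

def l2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def filter_and_average(updates, threshold=3.0):
    """Flag updates far from the coordinate-wise median, then average the rest.

    An update is flagged when its distance to the median exceeds `threshold`
    times the median of all such distances (a simple, hypothetical rule).
    """
    ref = coordinate_median(updates)
    dists = [l2(u, ref) for u in updates]
    cutoff = threshold * statistics.median(dists)
    kept = [u for u, d in zip(updates, dists) if d <= cutoff]
    flagged = [i for i, d in enumerate(dists) if d > cutoff]
    avg = [sum(col) / len(kept) for col in zip(*kept)]
    return avg, flagged

honest = [[1.0, 1.1], [0.9, 1.0], [1.1, 0.9], [1.0, 1.0]]
poisoned = [[25.0, -30.0]]  # one malicious client sends an arbitrary update
avg, flagged = filter_and_average(honest + poisoned)
print(flagged)  # [4]
print(avg)
```

Such distance-based filters catch crude poisoning like the example above, but a careful attacker can keep malicious updates close to the honest ones, which is one reason the problem remains open.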

Model FL Frameworks

Flower

- Flexible, easy-to-use and easily understood open-source FL framework.

- Framework-agnostic, meaning that nearly any ML model can be migrated to the federated setting with little effort.

- Well-suited for research and study projects.

NVIDIA Federated Learning Application Runtime Environment (NVFlare)

- NVFlare is a business-ready FL framework by NVIDIA.

- It supports a variety of models.

- NVFlare is framework-agnostic.

TensorFlow Federated (TFF)

- Developed by Google.

- Specifically designed for compatibility with TensorFlow.

- Integrates smoothly with existing TensorFlow workflows.

PySyft/PyGrid

- Python library for secure Federated Learning.

- Developed by OpenMined.

- Compatible with popular deep learning frameworks like PyTorch and TensorFlow.

Federated AI Technology Enabler (FATE)

- Open-source Federated Learning platform developed by WeBank’s AI Group.

- Business-ready FL framework.

- The framework comes with a large number of modules.

- It has backends for the deep learning libraries PyTorch and TensorFlow.

Conclusions

Key considerations for Federated Learning

- Optimizing Client Selection

- Use techniques to improve the response efficiency of end devices.

- Select clients based on data quality and reliability.

- Aggregation Algorithm Selection

- Choose the most suitable algorithms for data aggregation.

- Consider scalability, efficiency and accuracy of algorithms.

- Framework Customization

- Tailor framework selection to meet specific task requirements.

- Security Enhancement

- Implement robust security measures for communication and data sharing.

- Ensure encryption, authentication and privacy-preserving techniques.

- Compliance and Ethical Consideration

- Adhere to data privacy regulations and ethical guidelines.

- Positive aspects of FL

- Minimized data transfer.

- Build a larger and more diverse dataset.

- Train a more general and global model.

- International collaboration.

- Aspects to consider

- Security issues like model poisoning.

- Bias and fairness.

- Interpretability.