Understanding threats in federated learning

Alberto Pedrouzo-Ulloa (atlanTTic Research Center, Universidade de Vigo)

Outline

- Introduction: Conventional vs Federated Learning.

- In the line of fire: Privacy Threats and Attacks.

- Gossiping adversaries: Honest but Curious.

- Into the dark side: Getting Malicious.

- Privacy metrics: Can we measure the Privacy Leakage?

- Conclusions: Episode PETs - A New Hope

Introduction: Conventional vs Federated Learning.

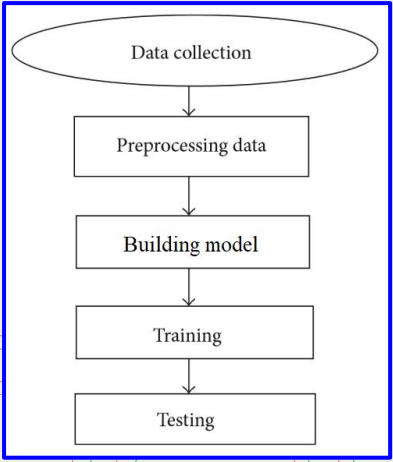

High-level Workflow in Machine Learning

Gather enough data

- Data collection

- Gather relevant data.

- Preprocessing data

- Clean and prepare the data for analysis.

Train a model

- Build an ML model (choose an adequate model, model training, hyperparameter tuning, evaluate the model, etc.).

Deploy the model

- Use the trained model for your application.

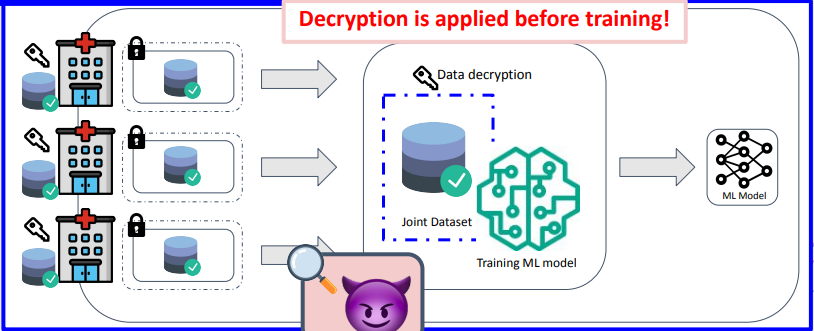

What if data comes from different sources?

All local data must be sent to a trusted party.

- Encryption can be applied to protect data in transit and at rest.

- However… we must still trust the party doing the ML training!

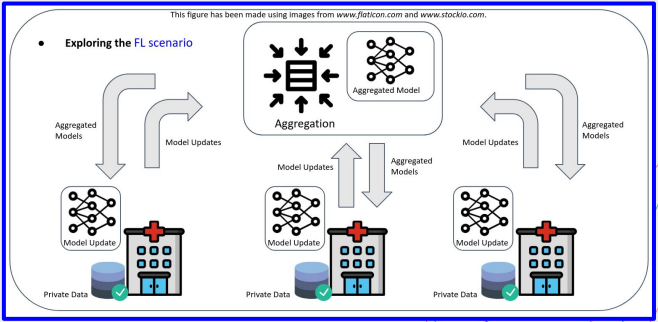

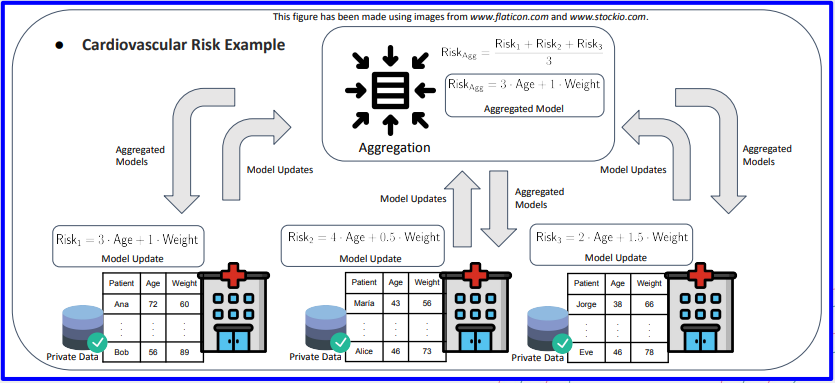

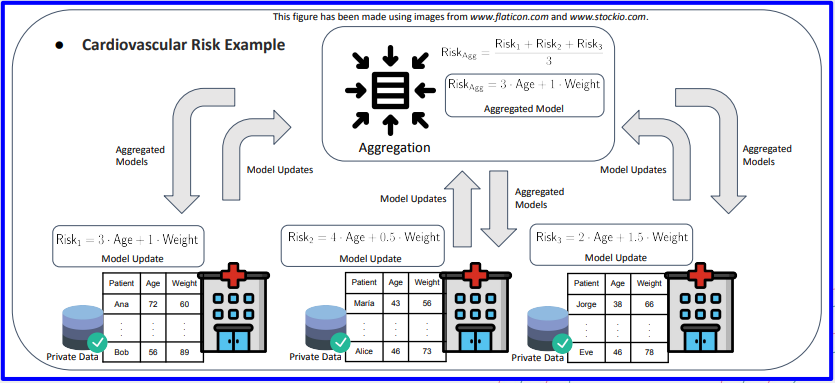

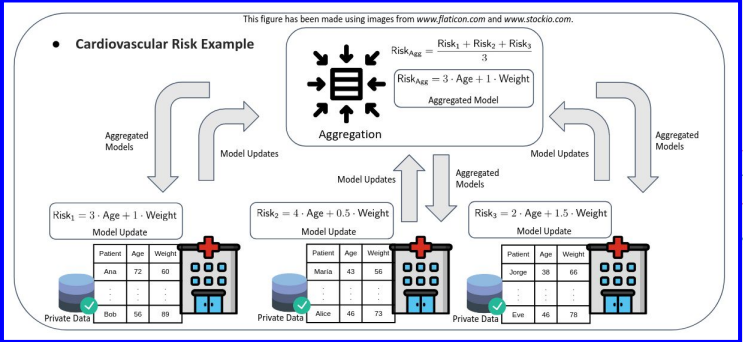

Federated Learning

- Training without explicitly sharing data.

FL allows ML models to be trained without explicitly sharing the training data.

- Only local updates are exchanged.

- Cross-silo FL

A model is built from the training sets of a small number of servers.
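The exchange of local updates can be sketched in a few lines. This is a minimal illustration, assuming a linear regression model, three synthetic silos, and a size-weighted average at the aggregator (in the style of federated averaging). The function names `local_update` and `federated_average`, the learning rate, and the data are all hypothetical and not taken from the talk.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=5):
    """Data owner: a few gradient steps of linear regression on local data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w  # only this vector leaves the silo, never X or y

def federated_average(updates, sizes):
    """Aggregator: average the local models, weighted by local dataset size."""
    sizes = np.asarray(sizes, dtype=float)
    return np.average(np.stack(updates), axis=0, weights=sizes)

# Synthetic silos (assumption: all silos share the same underlying model).
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
silos = []
for n in (50, 80, 120):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    silos.append((X, y))

global_w = np.zeros(2)
for _ in range(50):  # communication rounds
    updates = [local_update(global_w, X, y) for X, y in silos]
    global_w = federated_average(updates, [len(y) for _, y in silos])

print(global_w)
```

Note that the aggregator still sees every individual update in the clear, which is exactly what the attacks in the next section exploit.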

In the line of fire: Privacy Threats and Attacks.

Some example attacks

- Is there a specific person in the database?

- Can we reconstruct attributes of people in the database?

- Can either the Aggregator or any Data Owner poison the updates?

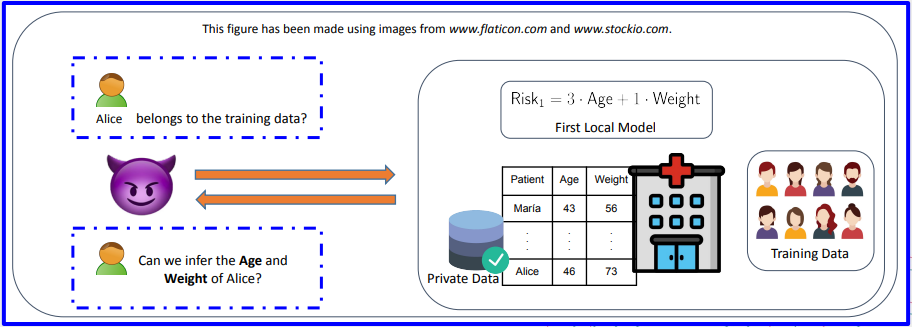

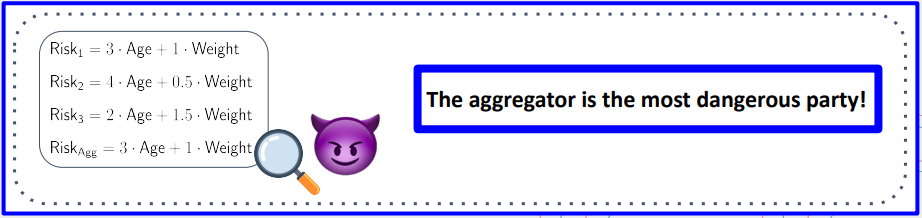

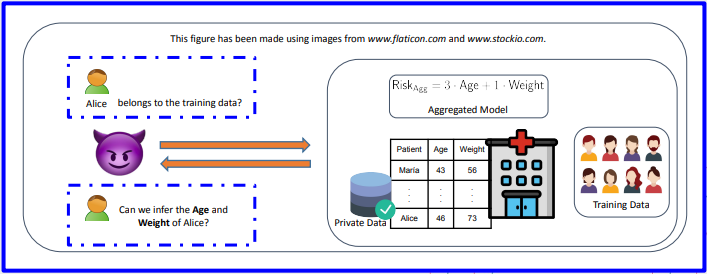

The power of the aggregator

- FL was initially proposed to avoid moving the training data out of its source.

- It also aims at reducing communication costs and “ensuring data privacy”.

- Some example attacks:

- Is there a specific person in the database of a particular hospital?

- Can we reconstruct attributes of the people in the database?

Membership inference

- General cancer risk: 350 per 100000 people (aged 45-49)

- “Cancer risk” knowing that a specific person is contained in the training data: 1 per 2 people
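Taking the two figures above at face value, a short calculation shows how much an adversary's belief shifts once membership is known. The variable names are illustrative, and the only inputs are the two rates quoted on the slide.

```python
from fractions import Fraction

baseline = Fraction(350, 100_000)  # general risk, ages 45-49 (from the slide)
in_dataset = Fraction(1, 2)        # risk given membership (from the slide)

# How many times more likely the diagnosis looks after learning membership.
risk_ratio = in_dataset / baseline

print(f"baseline:   {float(baseline):.4%}")
print(f"in dataset: {float(in_dataset):.0%}")
print(f"ratio:      {float(risk_ratio):.1f}x")
```

With these numbers, simply learning that a person is in the training set raises the inferred risk from 0.35% to 50%, a factor of roughly 143.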

Gossiping adversaries: Honest but Curious.

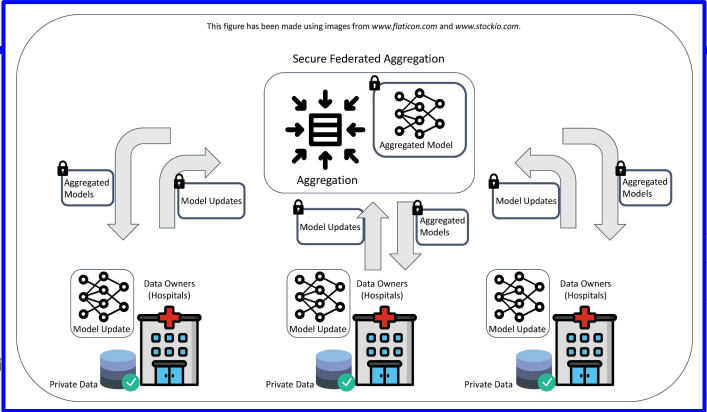

Honest but curious Aggregator and/or Data Owners

- This adversary

- Does not deviate from the prescribed steps.

- May still try to learn information from the data exchanged.

Private Aggregation with Privacy-Enhancing Technologies (PETs)

- PET methods can help to counter the confidentiality threats from the Aggregator and DOs (e.g., Homomorphic Encryption, Differential Privacy, etc.).
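One way to picture private aggregation is pairwise additive masking, an SMPC-style technique that is used here in place of the HE and DP examples named above because it fits in a few lines. This is a toy sketch under strong assumptions: a single random generator stands in for the secrets that each pair of data owners would agree on, arithmetic is over floats rather than a finite field, and no party drops out. The function name `masked_updates` is hypothetical.

```python
import numpy as np

def masked_updates(updates, seed=0):
    """Each pair (i, j) shares a random mask: i adds it, j subtracts it."""
    rng = np.random.default_rng(seed)  # stands in for pairwise shared secrets
    n = len(updates)
    masked = [u.astype(float).copy() for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            mask = rng.normal(size=updates[i].shape)
            masked[i] += mask
            masked[j] -= mask
    return masked

updates = [np.array([1.0, 2.0]), np.array([3.0, 4.0]), np.array([5.0, 6.0])]
masked = masked_updates(updates)

# The aggregator only receives the masked vectors; the masks cancel in the sum.
aggregate = np.sum(masked, axis=0)
print(aggregate)
```

Each masked vector on its own looks random to the aggregator, yet the sum equals the sum of the original updates.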

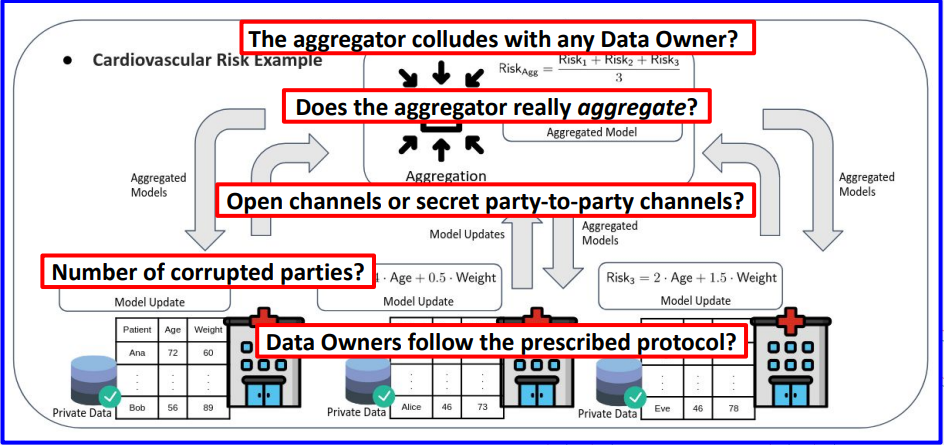

Into the dark side: Getting Malicious.

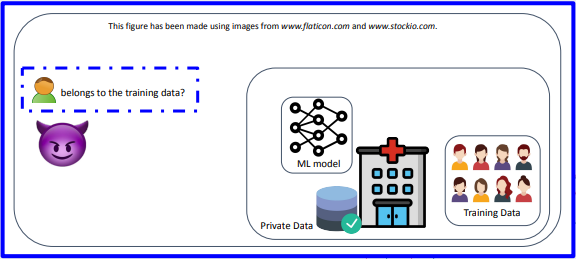

Malicious Aggregator and/or Data Owners

- This adversary

- May actively deviate from the prescribed steps to try to learn information from the data exchanged.


Some possible fixes (without PETs)

- Malicious Aggregators -> Adding redundancy in the aggregation.

If we add extra parties in charge of the aggregation, we can check whether all of them provide the same aggregation.

- Malicious Data Owners -> Trying new aggregation rules.

We could have a more robust aggregation by considering, for example, the median instead of the mean.
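Both fixes can be illustrated with a small sketch. It assumes updates are plain NumPy vectors, uses a coordinate-wise median as the robust rule, and checks redundancy by comparing the results reported by several aggregators. The helper names and the poisoned update are made up for illustration.

```python
import numpy as np

def mean_aggregate(updates):
    """Standard rule: coordinate-wise mean of all updates."""
    return np.mean(np.stack(updates), axis=0)

def median_aggregate(updates):
    """Robust rule: coordinate-wise median of all updates."""
    return np.median(np.stack(updates), axis=0)

def aggregators_agree(results, tol=1e-9):
    """Redundancy check: do all aggregators report the same result?"""
    return all(np.allclose(results[0], r, atol=tol) for r in results[1:])

honest = [np.array([1.0, 1.0]), np.array([1.1, 0.9]), np.array([0.9, 1.1])]
poisoned = honest + [np.array([100.0, -100.0])]  # one malicious data owner

mean_result = mean_aggregate(poisoned)      # dragged far away from 1.0
median_result = median_aggregate(poisoned)  # stays close to the honest values

print(mean_result, median_result)
# A second aggregator reporting a different value is detected by the check.
print(aggregators_agree([median_result, median_result.copy()]))
print(aggregators_agree([median_result, mean_result]))
```

The median tolerates a minority of outliers, whereas a single poisoned update is enough to shift the mean arbitrarily.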

Privacy metrics: Can we measure the Privacy Leakage?

Privacy metric in TRUMPET

- Should provide a “score” proportional to the privacy risks in the FL implementation. We may need more than one metric, depending on the particular attack.

- Some possible examples for this score

- Measure the effectiveness of state-of-the-art attacks.

- Measure the remaining privacy budget.

- How much does y (what the adversary observes) tell us about x (the private information)?

- If we have a statistical model for x and y, the mutual information comes naturally as a measure of leakage.

- We have defined a privacy metric for membership inference attacks based on the mutual information between the membership variable for the target record and the observations.
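For discrete variables, mutual information is I(X;Y) = Σ p(x,y) log2[ p(x,y) / (p(x) p(y)) ]. The sketch below computes it from a joint probability table, with x read as a binary membership variable and y as a binary observation. The two joint tables are illustrative assumptions, and this is not the TRUMPET metric itself, only the textbook quantity it builds on.

```python
import numpy as np

def mutual_information(joint):
    """I(X;Y) in bits from a joint probability table p(x, y)."""
    joint = np.asarray(joint, dtype=float)
    joint = joint / joint.sum()            # normalise, in case counts are given
    px = joint.sum(axis=1, keepdims=True)  # marginal of x (rows)
    py = joint.sum(axis=0, keepdims=True)  # marginal of y (columns)
    nz = joint > 0                         # skip zero cells: 0 * log 0 = 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (px @ py)[nz])))

# Rows: x = non-member / member. Columns: two possible observations y.
no_leak = [[0.25, 0.25], [0.25, 0.25]]   # y is independent of membership
full_leak = [[0.5, 0.0], [0.0, 0.5]]     # y reveals membership exactly

mi_none = mutual_information(no_leak)
mi_full = mutual_information(full_leak)
print(mi_none, mi_full)
```

A score of 0 bits means the observations say nothing about membership, while 1 bit (the maximum for a balanced binary x) means membership is fully revealed.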

Conclusions

- Federated Learning (FL) appeared as a promising solution to train models on data coming from different sources.

- Only parameter updates are exchanged.

- Data is never directly shared.

- Despite its potential, FL introduces several relevant security and privacy challenges.

- To mitigate these issues:

- More robust architectures can be designed.

- Privacy-Enhancing Technologies (PETs) can be incorporated (SMPC, HE, DP, ZKPs, etc.).

- In TRUMPET we propose mechanisms to quantify the privacy leakage caused by the exchanged updates.