EC3 Clients

EC3 Clients: EC3aaS & EC3 CLI

In this section of the course, we review the two clients that EC3 offers and the specific capabilities of each one. If you have credentials for the vo.access.egi.eu VO in EGI, you can try out EC3aaS yourself by following the example described in the first section of this topic. Finally, we encourage you to take the brief questionnaire to check that you have understood the key concepts behind the two EC3 clients. Let's start!

1.- Web Interface (EC3aaS)

EC3aaS is the graphical interface of EC3. It makes EC3 accessible to inexperienced users through a user-friendly web interface for deploying and configuring a virtual elastic cluster on several cloud providers, including EGI FedCloud. EC3aaS is available as part of the EGI AoD programme, which gives small laboratories and individual researchers access to a wide range of computational resources and online services for managing and analysing large amounts of data. Within this service we find the EC3 portal:

The EC3 AoD portal lets you launch virtual elastic clusters on top of EGI FedCloud resources using the EC3 tool.

It only requires an EGI Check-in account (and membership of the vo.access.egi.eu VO) to access the service.

The user is guided step by step through the deployment process.

Documentation and tutorials are available, e.g. on configuring a Galaxy cluster for data-intensive research.

Let's have a look at the service and how to use it to deploy your cluster!

Figure 1.- EC3aaS homepage.

The first step to access the EC3 portal is to authenticate with your EGI Check-in credentials. Once logged in, you will see your full name in the top-right corner. These credentials will be used to interact with the EGI Cloud Compute providers. Then, to configure and deploy a virtual elastic cluster using EC3aaS, the user goes to the homepage and selects “Deploy your cluster!” (Fig. 1). The web page then shows the Cloud providers supported by the AoD web interface version of EC3: EGI Cloud Compute or HelixNebula Cloud.

The next step is to choose the Cloud provider where the cluster will be deployed (Fig. 2). For this tutorial, let's choose the EGI FedCloud provider.

Figure 2. List of Cloud providers supported by EC3aaS.

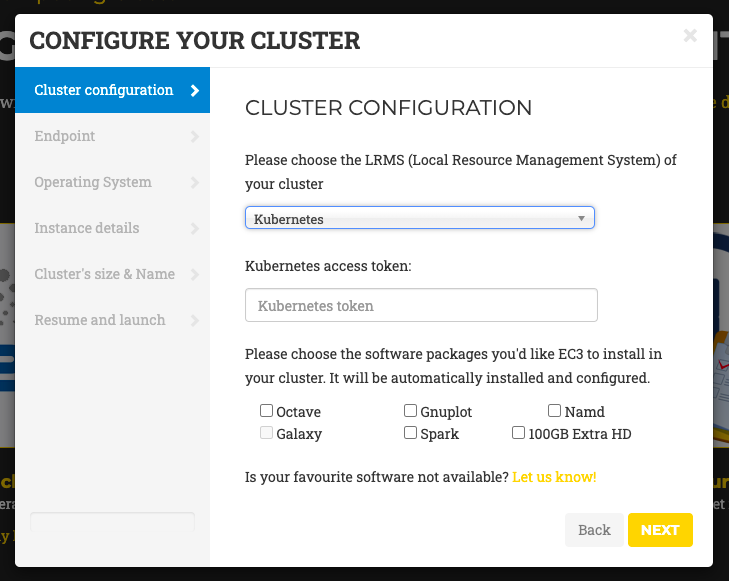

When the user chooses the EGI Cloud Compute provider, a wizard pops up (Fig. 3). This wizard guides the user through the configuration of the cluster: the Cloud site where the VMs will be deployed, the operating system, the LRMS to use, the characteristics of the nodes, the maximum number of cluster nodes and the software packages to be installed.

Figure 3. Wizard to configure and deploy a virtual cluster in EGI Cloud Compute.

Specifically, the wizard steps are:

Cluster Configuration: the user chooses the Local Resource Management System (LRMS) that EC3 will automatically install and configure. Currently, SLURM, Torque, Grid Engine, Mesos (+ Marathon + Chronos), Kubernetes (with the option to add a security token), ECAS, Nomad and OSCAR are supported. A set of common software packages can also be installed in the cluster: Spark, Galaxy (only for SLURM clusters), GNUPlot or Octave. EC3 installs and configures them automatically during the contextualization process. If the user needs other software installed in the cluster, a new Ansible recipe can be developed and added to EC3 using the CLI (command-line) interface.

Endpoint: the user has to choose one of the EGI Cloud Compute sites that support the vo.access.egi.eu VO. The list of sites is obtained automatically from the EGI AppDB information system. If a site has errors reported in the Argo Monitoring System, a message (CRITICAL state!) is added to its name. You can still use such a site, but the deployment may fail because of these errors.

Operating System: the user chooses the OS of the cluster from the list of operating systems provided by the selected Cloud site (also obtained from AppDB).

Instance details: the user must indicate the instance details, such as the number of CPUs or the amount of RAM, for the front-end and for the working nodes of the cluster (the available options are also obtained from AppDB).

Cluster’s size & Name: here, the user selects the maximum number of nodes of the cluster (from 1 to 10), not including the front-end node. This value is the maximum number of working nodes the cluster can scale to. Remember that the cluster is initially created with only the front-end, and the working nodes are powered on on demand. A unique name is also required to identify the cluster.

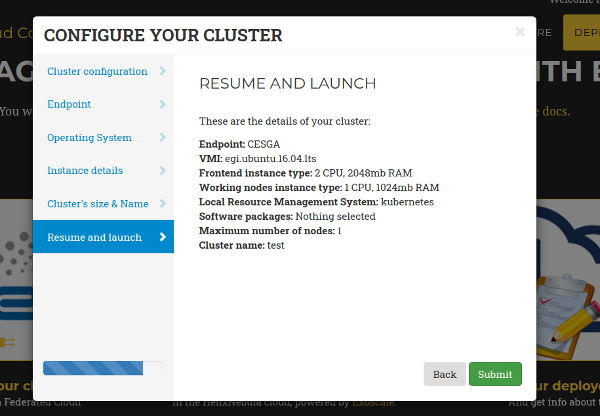

Resume and Launch: the last step of the wizard shows a summary of the chosen cluster configuration, and the deployment process starts when the user clicks the Submit button (Fig. 4).

Figure 4. Resume and Launch: final Wizard step.

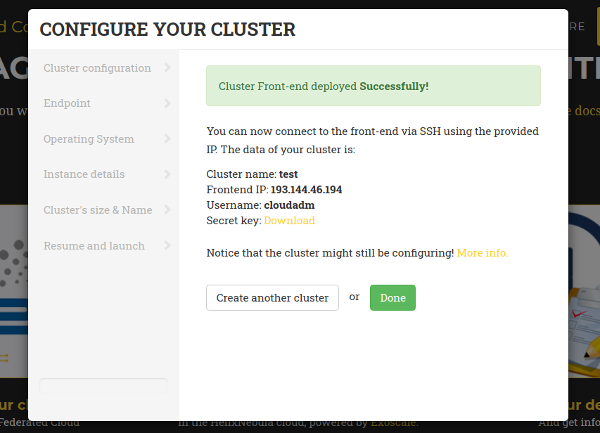
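The constraints mentioned in the wizard steps above can be summarised in a small validation sketch. This is only an illustration of the rules stated in the text (a unique cluster name, between 1 and 10 working nodes, Galaxy only on SLURM clusters); the class `ClusterRequest`, its field names and the `problems` method are hypothetical and are not part of EC3 or EC3aaS.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClusterRequest:
    """Hypothetical container for the choices made in the EC3aaS wizard."""

    name: str
    lrms: str
    endpoint: str
    operating_system: str
    max_nodes: int  # working nodes only, the front-end is not counted
    software: List[str] = field(default_factory=list)

    def problems(self, existing_names: List[str]) -> List[str]:
        """Return human-readable validation problems (empty if none)."""
        found = []
        if not self.name:
            found.append("cluster name is required")
        elif self.name in existing_names:
            found.append("cluster name must be unique")
        if not 1 <= self.max_nodes <= 10:
            found.append("maximum number of working nodes must be between 1 and 10")
        if "Galaxy" in self.software and self.lrms != "SLURM":
            found.append("Galaxy is only offered for SLURM clusters")
        return found


if __name__ == "__main__":
    request = ClusterRequest("demo", "SLURM", "site-a", "Ubuntu", 5, ["Galaxy"])
    print(request.problems(existing_names=[]))
```

The site and operating system values used here are placeholders; in EC3aaS the real options come from AppDB.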

Finally, once all the wizard steps have been completed correctly, the Submit button starts the deployment of the cluster. Only the front-end is deployed at this point, because EC3 provisions the working nodes automatically when the workload of the cluster requires them. When the virtual machine of the front-end is running, EC3aaS provides the user with the data needed to connect to the cluster (Fig. 5): the username and SSH private key, the front-end IP and the name of the cluster.

Figure 5. Information received by the user when a deployment succeeds.

The cluster may not yet be configured when the web page returns the IP of the front-end, because configuring the cluster is a batch process that takes several minutes, depending on the chosen configuration. However, the user can log in to the front-end machine of the cluster from the moment it is deployed. To find out whether the cluster is configured, use the command is_cluster_ready, which checks whether the configuration process has finished:

    user@local:~$ ssh -i key.pem <username>@<front_ip>
    ubuntu@kubeserverpublic:~$ is_cluster_ready
    Cluster configured!

If the command is_cluster_ready is not found, it means that the cluster is still being configured.
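The check can also be repeated automatically until the cluster is ready. The following is a minimal sketch, assuming that is_cluster_ready can be run through a non-interactive SSH session and that it prints "Cluster configured!" as in the session shown earlier. The function names, the number of attempts and the delay are illustrative choices, not part of EC3.

```python
import subprocess
import time
from typing import Callable, List


def build_ssh_command(key_path: str, username: str, front_ip: str) -> List[str]:
    """Return the argv that runs is_cluster_ready on the front-end over SSH."""
    return ["ssh", "-i", key_path, f"{username}@{front_ip}", "is_cluster_ready"]


def run_command(argv: List[str]) -> str:
    """Run a command and return its stdout and stderr as one string."""
    result = subprocess.run(argv, capture_output=True, text=True)
    return result.stdout + result.stderr


def wait_until_configured(
    argv: List[str],
    runner: Callable[[List[str]], str] = run_command,
    attempts: int = 30,
    delay_seconds: float = 60.0,
) -> bool:
    """Poll until the output contains 'Cluster configured!' or attempts run out."""
    for attempt in range(attempts):
        if "Cluster configured!" in runner(argv):
            return True
        if attempt < attempts - 1:
            time.sleep(delay_seconds)  # the cluster is still being configured
    return False


if __name__ == "__main__":
    # Placeholder values: use the key, username and IP given by EC3aaS.
    command = build_ssh_command("key.pem", "ubuntu", "203.0.113.10")
    print("ready" if wait_until_configured(command) else "not ready yet")
```

The `runner` parameter exists only so that the polling logic can be exercised without a real cluster; a "command not found" reply is treated like any other "not ready" answer.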

1.1.- Management of deployed clusters

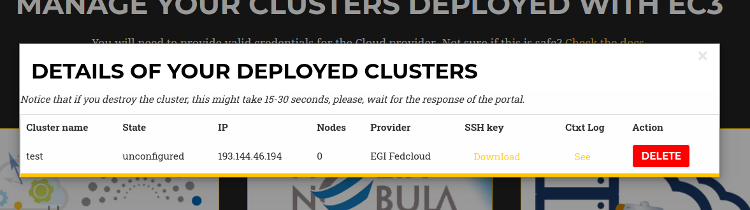

You can list all your deployed clusters by choosing the “Manage your deployed clusters” option (right in Fig. 2). It shows the details of the clusters launched by the user: cluster name (specified by the user on creation), state, front-end public IP and number of working nodes deployed. It also lets the user download the SSH private key needed to access the front-end node, as well as the contextualization log with all the configuration steps performed. This log lets the user verify the current status of the cluster configuration and check for errors if the cluster is not correctly configured (unconfigured state). Finally, it also offers a button to delete the cluster.

When the deletion process finishes successfully, the front-end of the cluster and all the working nodes have been destroyed, and a message informs the user that the operation succeeded. If an error occurs during deletion, an error message is returned to the user.

Figure 7. List of Clusters deployed by the active user.

Notice that EC3aaS offers only basic actions: create, list and destroy virtual clusters. It does not offer all the capabilities of EC3, such as hybrid clusters or the reconfiguration of clusters. Those capabilities are considered advanced aspects of the tool and are only available via the EC3 command-line interface.

More documentation regarding EC3aaS can be found here: https://ec3.readthedocs.io/en/latest/ec3aas.html

2.- Command line interface (EC3 CLI)

The EC3 CLI is a more powerful client than the web interface, because it includes all the capabilities EC3 offers. It is written in Python and offers a common Unix command-line interface. Specifically, EC3 CLI provides:

More control over the cluster (reconfigure, clone, migrate, stop, restart).

Support for hybrid clusters.

Support for golden images.

However, this interface is more complex to use than EC3aaS. It is recommended for experienced users, or users with specific needs, who are comfortable working with Unix command-line interfaces. The user gains more capabilities and the ability to customize EC3's behaviour, and even add new templates, but also needs to know low-level details of the tool that EC3aaS hides. For example, the user has to define an authorization file. Let's have a look at the CLI and the specific options it offers!

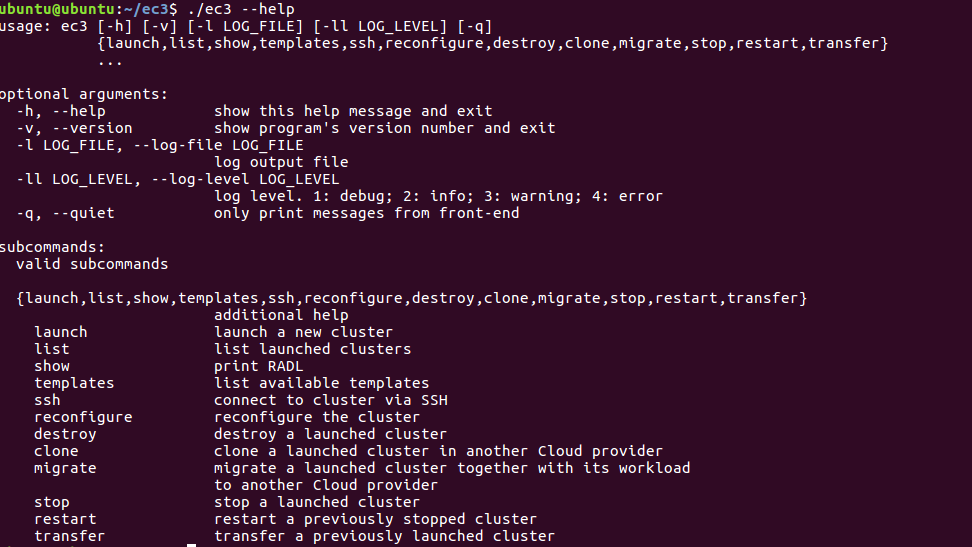

Figure 8.- Output of the EC3 help command where all the available actions are shown.

In a nutshell, EC3 CLI has the following available options:

Command launch: this is the command used to create a cluster. Here you have to indicate the recipes you want to use to configure the cluster.

Command reconfigure: this option reconfigures a previously deployed cluster. It can be called after a failed deployment (the resources already provisioned are kept and a new attempt to configure them takes place). It can also be used to apply a new configuration to a running cluster.

Command ssh: this command opens an SSH session to the infrastructure front-end of a previously deployed cluster.

Command destroy: this option undeploys the cluster and removes the associated information from the local database. It also removes all the additional resources created with the cluster, such as security groups or additional HDDs.

Command show: this command prints the RADL description of the cluster stored in the local database. It also returns the logs of the contextualization process of the cluster.

Command list: this command prints a table with information about the clusters that have been launched with EC3 CLI. For each cluster, the following information is shown: name, state, IP of the front-end, number of nodes and cloud provider.

Command templates: this option displays basic information about the available templates, such as name, kind and a summary description.

Command clone: this command clones a previously deployed infrastructure front-end from one provider to another.

Command migrate: this command migrates a previously deployed cluster and its running tasks from one provider to another. The original cluster must have been deployed with SLURM and BLCR; otherwise, the migration cannot be performed. Also, this operation only works with clusters whose images are selected by the VMRC; it does not work if the URL of the VMI/AMI is explicitly written in the system RADL.

Command stop: this command stops a cluster so that you can continue using it later. This saves resources at the cloud provider while the cluster is not being used.

Command restart: this command restarts a previously stopped cluster.

Command transfer: this command transfers an already launched cluster that has not yet been transferred to the internal IM.
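To see how these commands fit together, here is a small sketch that builds the command lines for a typical cluster lifecycle (launch, list, ssh, destroy) without executing them. It assumes that the executable is called `ec3`, that `launch` takes the cluster name followed by the template names, and that the authorization file is passed with `-a`; please confirm the exact syntax with the help output of your installed version. The cluster name, the template names `slurm` and `ubuntu16` and the file name `auth.dat` are illustrative placeholders.

```python
import shlex
from typing import List, Sequence


def launch_command(cluster: str, templates: Sequence[str], auth_file: str) -> List[str]:
    """Build the argv for 'ec3 launch' (the '-a' option is an assumption)."""
    return ["ec3", "launch", cluster, *templates, "-a", auth_file]


def cluster_command(action: str, cluster: str) -> List[str]:
    """Build the argv for an action that targets one existing cluster."""
    allowed = {"reconfigure", "ssh", "destroy", "show", "stop", "restart"}
    if action not in allowed:
        raise ValueError(f"unsupported action: {action}")
    return ["ec3", action, cluster]


def lifecycle(cluster: str, templates: Sequence[str], auth_file: str) -> List[List[str]]:
    """Return the commands for a simple create, inspect, connect, delete cycle."""
    return [
        launch_command(cluster, templates, auth_file),
        ["ec3", "list"],
        cluster_command("ssh", cluster),
        cluster_command("destroy", cluster),
    ]


if __name__ == "__main__":
    for argv in lifecycle("mycluster", ["slurm", "ubuntu16"], "auth.dat"):
        print(shlex.join(argv))
```

Building the commands as argument lists rather than strings avoids quoting problems if you later decide to run them with `subprocess.run`.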

EC3 CLI also supports the use of golden images. Golden images are a mechanism to accelerate the contextualization process of the working nodes in the cluster. A golden image is created when the first node of the cluster is deployed and configured. It provides a preconfigured AMI created specifically for the cluster, with no user interaction required. Each golden image has a unique id that relates it to the infrastructure. Golden images are deleted when the cluster is destroyed. More information on this feature is available in the official documentation.

2.1.- Authorization file

The authorization file is essential to use EC3 CLI, and it is the same one used by the IM client. It stores in plain text the credentials to access the cloud providers and the IM service. Each line of the file refers to a single credential and is composed of key-value pairs separated by semicolons. The key and the value must be separated by “ = ”, that is, an equals sign preceded and followed by at least one white space, like this:

id = id_value ; type = value_of_type ; username = value_of_username ; password = value_of_password

Values can contain “=”, and “\n” is replaced by a carriage return. Several keys are available, depending on the cloud provider you use. You can have a look at all the available options here. The basic ones are:

type: indicates the service the credential refers to. The services supported are InfrastructureManager, VMRC, OpenNebula, EC2, OpenStack, OCCI, LibCloud, Docker, GCE, Azure, and LibVirt.

username: indicates the user name associated with the credential. In EC2 it refers to the Access Key ID. In Azure it refers to the user Subscription ID. In GCE it refers to the Service Account’s Email Address.

password: indicates the password associated with the credential. In EC2 it refers to the Secret Access Key. In GCE it refers to the Service Private Key (see here for how to get it and how to extract the private key file).

tenant: indicates the tenant associated with the credential. This field is only used in the OpenStack plugin.

host: indicates the address of the access point to the cloud provider. This field is not used in IM and EC2 credentials.

id: associates an identifier with the credential. The identifier should be used as the label in the deploy section in the RADL.

Notice that the user credentials you specify are only used to provision the resources (virtual machines, security groups, keypairs, etc.) on your behalf. No other resources will be accessed or deleted.
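The line format described in this section can be made concrete with a short parsing sketch. This is not the parser used by EC3 or IM; it only follows the rules stated above: pairs separated by semicolons, key and value separated by an equals sign with white space on both sides, values that may contain “=”, and a literal “\n” replaced by a line break (interpreted here as a newline character). It assumes that values do not contain semicolons, and the function names are hypothetical.

```python
import re
from typing import Dict, List

# A key, then '=' surrounded by at least one white space on each side, then the value.
_PAIR = re.compile(r"^\s*(\w+)\s+=\s+(.*?)\s*$", re.DOTALL)


def parse_auth_line(line: str) -> Dict[str, str]:
    """Parse one credential line into a dictionary of key/value pairs."""
    credential: Dict[str, str] = {}
    for chunk in line.split(";"):
        if not chunk.strip():
            continue  # tolerate a trailing semicolon
        match = _PAIR.match(chunk)
        if match is None:
            raise ValueError(f"expected 'key = value', got {chunk!r}")
        key, value = match.group(1), match.group(2)
        # The two characters backslash + n stand for a line break in the value.
        credential[key] = value.replace("\\n", "\n")
    return credential


def parse_auth_text(text: str) -> List[Dict[str, str]]:
    """Parse a whole file body: one credential per non-empty line."""
    return [parse_auth_line(line) for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    sample = "id = id_value ; type = value_of_type ; username = value_of_username ; password = value_of_password"
    print(parse_auth_line(sample))
```

Only the first “ = ” in each pair is treated as the separator, which is what allows values to contain further equals signs.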

2.2.- Templates and recipes

EC3 recipes are described in a superset of RADL, which is a specification of virtual machines (e.g., instance type, disk images, networks, etc.) and contextualization scripts. EC3 also supports TOSCA templates (available here), but in this course we focus on the RADL version.

In EC3, there are three types of templates:

images: includes the system section of the basic template. It describes the main features of the machines that will compose the cluster, such as the operating system or the CPU and RAM required.

main: includes the deploy section of the front-end, as well as the configuration of the chosen LRMS.

component: covers all the recipes that install and configure software packages that can be useful for the cluster.

To deploy a cluster with EC3, the ec3 launch command must be given one recipe of kind main and one recipe of kind image. The component recipes are optional, and you can include as many as you need.
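The rule about template kinds can be checked before launching, as in the sketch below. The catalog is a hypothetical, hand-written mapping from template names to kinds (in practice the names and kinds would be read from the output of the templates command), and reading "one recipe" as "exactly one" is an assumption of this example. The kind of the image templates is written `images`, following the list of template types above.

```python
from typing import Dict, List, Sequence

# Hypothetical catalog; real names and kinds are shown by the templates command.
CATALOG: Dict[str, str] = {
    "slurm": "main",
    "torque": "main",
    "ubuntu16": "images",
    "octave": "component",
    "gnuplot": "component",
}


def check_templates(selected: Sequence[str], catalog: Dict[str, str]) -> List[str]:
    """Return the problems found in a template selection (empty if it is valid)."""
    problems = []
    unknown = [name for name in selected if name not in catalog]
    if unknown:
        problems.append(f"unknown templates: {', '.join(unknown)}")
    kinds = [catalog[name] for name in selected if name in catalog]
    # One 'main' and one 'images' template are required; components are optional.
    for kind in ("main", "images"):
        count = kinds.count(kind)
        if count != 1:
            problems.append(f"expected exactly one '{kind}' template, found {count}")
    return problems


if __name__ == "__main__":
    print(check_templates(["slurm", "ubuntu16", "octave"], CATALOG))
    print(check_templates(["slurm", "octave"], CATALOG))
```

Any number of component templates passes the check, which matches the statement that component recipes are optional.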

In the next section of the course we will work directly with EC3 CLI and also give you some hints on how to develop your own recipe. Extensive documentation on template creation is available at these links:

You have reached the end of this section! Now, please go ahead with the brief questionnaire we have prepared for you: